Leveraging LLMs for Phishing Email Detection

Learn how to build a phishing email detection system using Large Language Models (LLMs) and Python. This practical guide covers everything from setup and implementation to real-world testing.

Introduction

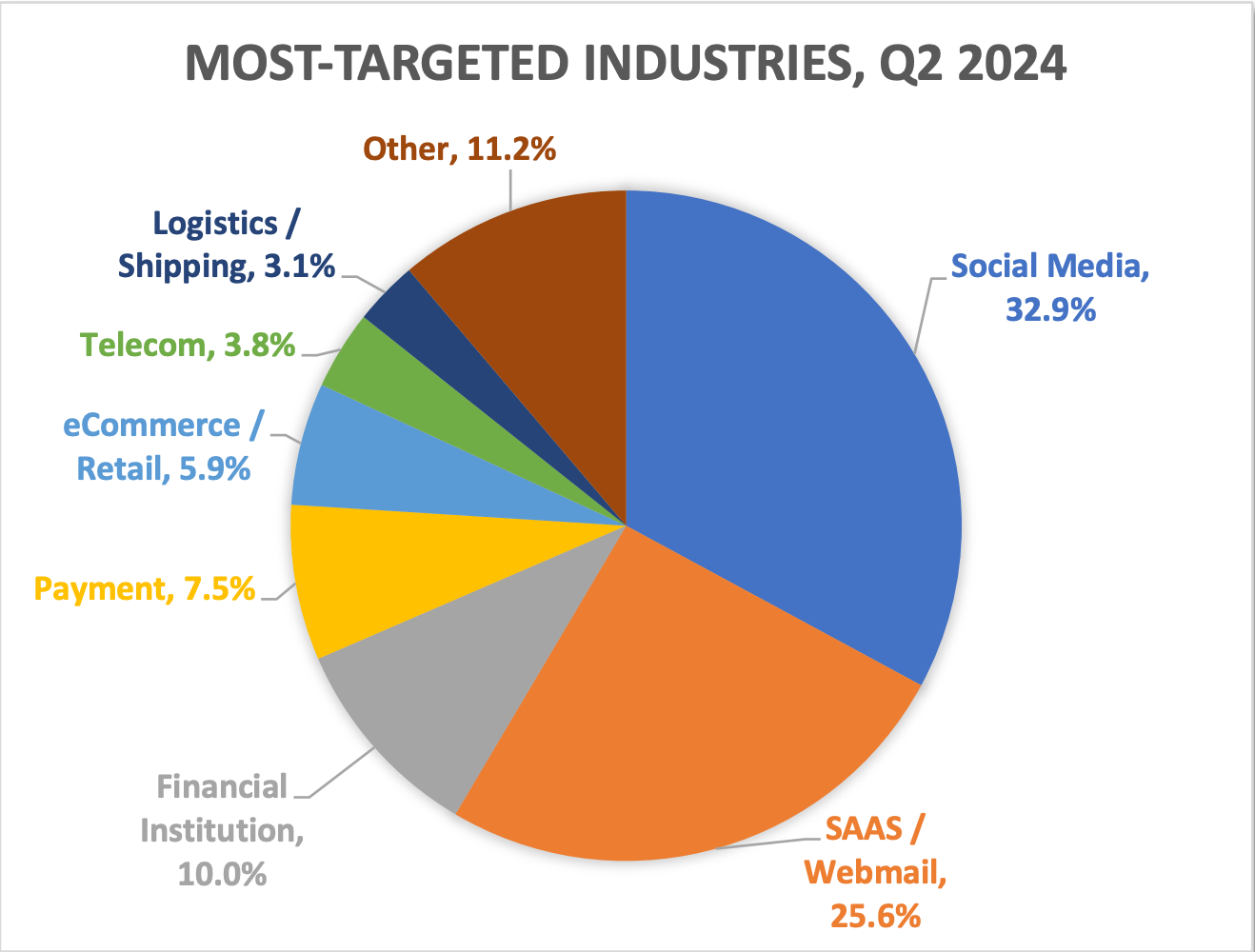

Phishing remains a top cybersecurity threat in 2024, with cybercriminals continually evolving their tactics. Traditional rule-based systems struggle to keep up, highlighting the need for adaptive solutions.

Enter LLMs: advanced models capable of understanding context and detecting subtle phishing cues that static methods miss.

Recent data from the Anti-Phishing Working Group shows nearly 1.9 million phishing attacks in the past year, with 877,536 in Q2 2024 alone. Business Email Compromise (BEC) scams have also surged, with average requested amounts rising to $89,520 per incident. The threat landscape is more active than ever, demanding innovative defenses.

In this article, I’ll show you how to leverage LLMs to build an effective phishing email analyzer, expanding on our previous cybersecurity lab guide.


Key takeaways

In this guide, you’ll learn how to:

- Set up a free, LLM-powered phishing analysis lab
- Build a custom LLM-based phishing detector
- Create an intuitive interface for easy email analysis
- Test your system using real-world phishing data
- Gain valuable insights to improve your detection strategies

Disclaimer

While LLM-based phishing detection provides powerful adaptability and contextual understanding, it shouldn’t be relied upon as a standalone solution. For comprehensive security, it’s essential to integrate this approach with traditional detection methods. Static analysis tools that flag known Indicators of Compromise (IoCs), such as suspicious URLs or file attachments, remain vital components of a robust security strategy.
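As a rough illustration of the kind of static check mentioned above, the sketch below extracts URLs from an email body and flags any whose hostname (or a parent domain) appears in a blocklist of known-bad domains. The regex, the `KNOWN_BAD_DOMAINS` set, and the `flag_known_bad_urls` helper are hypothetical; in practice the blocklist would come from a threat intelligence feed, and a production tool would use a more robust URL parser.

```python
import re
from urllib.parse import urlparse

# Hypothetical blocklist; in practice, load this from a threat intelligence feed
KNOWN_BAD_DOMAINS = {"amaz0n-account-verify.com"}

# Simple URL pattern: good enough for a sketch, not a full RFC 3986 parser
URL_PATTERN = re.compile(r"""https?://[^\s<>"']+""")


def flag_known_bad_urls(text: str, blocklist: set = KNOWN_BAD_DOMAINS) -> list:
    """Return the URLs in `text` whose host or any parent domain is blocklisted."""
    flagged = []
    for url in URL_PATTERN.findall(text):
        host = (urlparse(url).hostname or "").lower()
        labels = host.split(".")
        # a.b.example.com -> {a.b.example.com, b.example.com, example.com, com}
        candidates = {".".join(labels[i:]) for i in range(len(labels))}
        if candidates & blocklist:
            flagged.append(url)
    return flagged


body = "Verify here: https://amaz0n-account-verify.com/unlock-account or see https://company.zoom.us/j/123456789"
print(flag_known_bad_urls(body))
```

Checks like this are cheap and deterministic, so they make a good first filter before the slower, probabilistic LLM analysis.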

Setting up your phishing analysis lab

We’ll use Google Colab, a free cloud-based platform that offers access to GPU resources, ideal for running LLM-based analyses. We’ll also use Ollama, a tool that simplifies running and managing large language models locally. If you’re new to these technologies, check out our previous article for an introduction: How to build a free LLM cybersecurity lab with Google Colab and Ollama.

1. Preparing your environment

Start by opening our Phishing Analysis Colab Notebook. This Google Colab environment provides free access to GPU resources, perfect for running our LLM-based analysis.

2. Installing essential tools

Next, we’ll install Ollama, a tool for managing LLMs, along with necessary Python libraries to support our analysis.

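```python
# Install Ollama with its official install script (shell command run from a Colab cell)
!curl -fsSL https://ollama.com/install.sh | sh

# Install the Python libraries used in this lab
%pip install openai pydantic instructor ipywidgets beautifulsoup4 pandas tqdm chardet matplotlib seaborn wordcloud
```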
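Both `ollama pull` and the analysis code talk to an Ollama server that must already be running. In Colab, the install script typically cannot register Ollama as a system service, so if your notebook doesn’t start the server yet (the previous article covers this setup), a minimal sketch is to launch `ollama serve` in the background and poll its HTTP endpoint until it answers. The helper names and the 30-second timeout are assumptions; 11434 is Ollama’s default port.

```python
import subprocess
import time
import urllib.request

OLLAMA_BASE_URL = "http://127.0.0.1:11434"  # Ollama's default address


def wait_for_server(url: str, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll `url` until it answers with HTTP 200 or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            pass  # connection refused, timeout or HTTP error: server not ready yet
        time.sleep(interval)
    return False


def start_ollama(base_url: str = OLLAMA_BASE_URL) -> subprocess.Popen:
    """Launch `ollama serve` in the background and block until it responds."""
    proc = subprocess.Popen(
        ["ollama", "serve"], stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL
    )
    if not wait_for_server(base_url):
        proc.terminate()
        raise RuntimeError("Ollama server did not become ready in time")
    return proc


# In the notebook, run once before pulling a model:
# ollama_proc = start_ollama()
```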

3. Downloading a model

For this lab, we’ll use Google’s Gemma 2 model in its 9B instruction-tuned version, quantized to 4 bits (Q4_K_M), chosen for its strong performance relative to its size, especially in data extraction tasks.

```python
# Set the MODEL variable
OLLAMA_MODEL = "gemma2:9b-instruct-q4_K_M"

# Run the ollama pull command
!ollama pull {OLLAMA_MODEL}
```

Further reading: “Gemma 2 is now available to researchers and developers” and “Quantization”.

4. Implementing the phishing email analyzer

We use the Instructor library with Pydantic to create robust data models for our analysis. Think of these models as templates for organizing data, much like a recipe organizes ingredients and steps.

By defining these models upfront, we ensure that our analysis outputs are consistently structured, making them easier to handle programmatically.

```python
from enum import Enum
from typing import List

from pydantic import BaseModel, Field


class PhishingProbability(str, Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


class SuspiciousElement(BaseModel):
    element: str
    reason: str


class SimplePhishingAnalysis(BaseModel):
    is_potential_phishing: bool
    phishing_probability: PhishingProbability
    suspicious_elements: List[SuspiciousElement]
    recommended_actions: List[str]
    explanation: str
```

We deliberately keep the structure simple, because LLMs tend to become less reliable when asked to fill many fields at once. If you need to extract more fields, you can split the extraction across multiple prompts. See GitHub - jxnl/instructor: structured outputs for llms.
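One way to split the extraction is to define several small response models, make one call per model, and collect the results. In the sketch below, `SenderCheck`, `LinkCheck`, `run_extractions`, and `fake_extract` are hypothetical names. With a real model, the `extract` callable would wrap the same kind of Instructor call used in the analysis function (`client.chat.completions.create(..., response_model=model)`); a fake extractor with canned values is used here so the snippet runs without an LLM.

```python
from typing import Callable, Dict, List, Optional, Type

from pydantic import BaseModel


# Hypothetical focused schemas: each prompt only has to fill a few fields
class SenderCheck(BaseModel):
    sender_domain: str
    impersonated_brand: Optional[str] = None


class LinkCheck(BaseModel):
    urls: List[str]
    lookalike_domains: List[str]


Extractor = Callable[[str, Type[BaseModel]], BaseModel]


def run_extractions(
    email_content: str, models: List[Type[BaseModel]], extract: Extractor
) -> Dict[str, BaseModel]:
    """Run one extraction per response model and collect the results by model name."""
    return {model.__name__: extract(email_content, model) for model in models}


def fake_extract(email_content: str, model: Type[BaseModel]) -> BaseModel:
    # Stand-in for an LLM call so the example runs offline; returns canned values per model
    canned = {
        SenderCheck: {"sender_domain": "sender.example", "impersonated_brand": "Amazon"},
        LinkCheck: {
            "urls": ["https://amaz0n-account-verify.com/unlock-account"],
            "lookalike_domains": ["amaz0n-account-verify.com"],
        },
    }
    return model.model_validate(canned[model])


findings = run_extractions("(email text)", [SenderCheck, LinkCheck], fake_extract)
print(findings["LinkCheck"].lookalike_domains)
```

Each call sees a short, focused schema, at the cost of one extra LLM round trip per model.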

5. Creating the analysis function

For the system prompt (the instruction that sets up how the LLM should behave), we use a simple instruction:

Analyze the provided email content and metadata to determine if it’s a potential phishing attempt. Provide your analysis in a structured format matching the SimplePhishingAnalysis model.

Our core analysis function then calls Ollama’s local OpenAI-compatible endpoint through Instructor:

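```python
import instructor
from openai import OpenAI

# Instructor wraps the OpenAI client so replies are parsed into Pydantic models.
# Ollama serves an OpenAI-compatible API on port 11434; the API key is required
# by the client but ignored by Ollama. The client is created once and reused.
client = instructor.from_openai(
    OpenAI(
        base_url="http://127.0.0.1:11434/v1",
        api_key="ollama",
    ),
    mode=instructor.Mode.JSON,
)


def analyze_email(email_content: str) -> SimplePhishingAnalysis:
    resp = client.chat.completions.create(
        model=OLLAMA_MODEL,
        messages=[
            {
                "role": "system",
                "content": "Analyze the provided email content and metadata to determine if it's a potential phishing attempt. Provide your analysis in a structured format matching the SimplePhishingAnalysis model.",
            },
            {
                "role": "user",
                "content": email_content,
            },
        ],
        # Instructor validates the reply against this schema
        response_model=SimplePhishingAnalysis,
    )
    return resp
```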
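To see what `response_model=SimplePhishingAnalysis` enforces without calling the LLM, you can validate a hand-written reply with Pydantic v2’s `model_validate_json`; Instructor relies on the same Pydantic validation when it parses the model’s answer. The JSON below is a made-up example, not real Gemma 2 output, and the schema is repeated so the snippet runs on its own.

```python
from enum import Enum
from typing import List

from pydantic import BaseModel, ValidationError


# Same schema as above, repeated so this snippet is self-contained
class PhishingProbability(str, Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


class SuspiciousElement(BaseModel):
    element: str
    reason: str


class SimplePhishingAnalysis(BaseModel):
    is_potential_phishing: bool
    phishing_probability: PhishingProbability
    suspicious_elements: List[SuspiciousElement]
    recommended_actions: List[str]
    explanation: str


# Hand-written example of a well-formed reply (not real model output)
raw = """{
  "is_potential_phishing": true,
  "phishing_probability": "high",
  "suspicious_elements": [
    {"element": "https://amaz0n-account-verify.com/unlock-account",
     "reason": "Lookalike domain using a zero instead of an 'o'"}
  ],
  "recommended_actions": ["Do not click the link", "Report the email"],
  "explanation": "Urgent account-lock pretext with a lookalike verification link."
}"""

analysis = SimplePhishingAnalysis.model_validate_json(raw)
print(analysis.phishing_probability.value)  # high

# A value outside the enum is rejected instead of silently passed through
try:
    SimplePhishingAnalysis.model_validate_json(raw.replace('"high"', '"very high"'))
except ValidationError as exc:
    print(f"Rejected: {exc.error_count()} error(s)")
```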

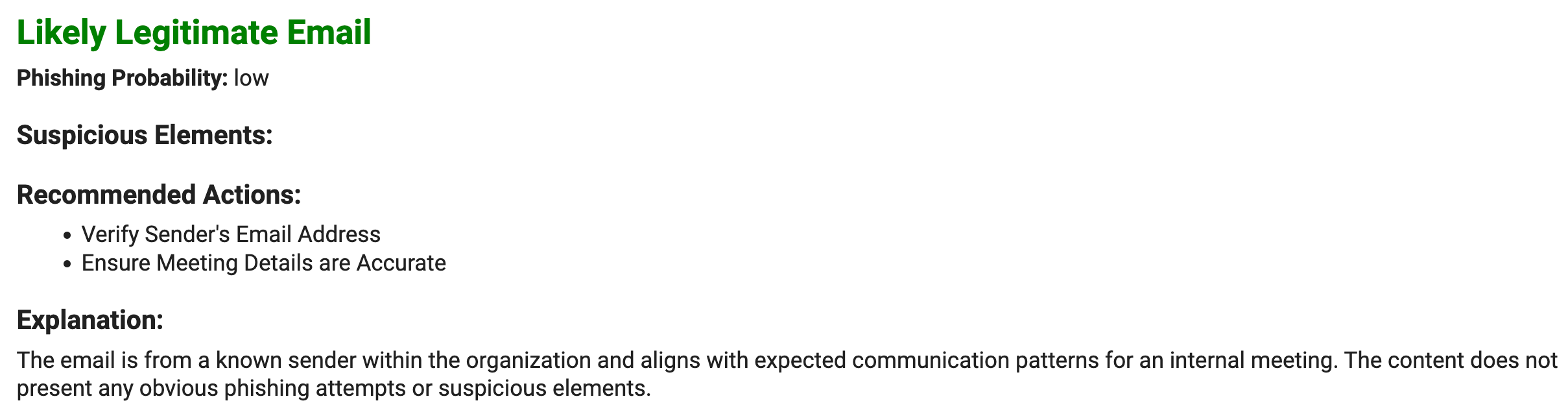

We then test our work using two mock emails (one phishing and one legitimate).

Legitimate mail

From: [email protected]

To: [email protected]

Subject: Quarterly Review Meeting - Thursday, 2 PM

Dear Team,

I hope this email finds you well. I'm writing to remind everyone about our upcoming Quarterly Review Meeting scheduled for this Thursday at 2 PM in the main conference room.

Agenda:

1. Q2 Performance Overview

2. Project Updates

3. Q3 Goals and Strategies

4. Open Discussion

Please come prepared with your team's updates and any questions you may have. If you're unable to attend in person, you can join via our usual video conferencing link:

https://company.zoom.us/j/123456789

Don't forget to bring your laptops for the interactive portion of the meeting.

If you have any questions or need to discuss anything before the meeting, feel free to drop by my office or send me an email.

Looking forward to seeing everyone on Thursday!

Best regards,

Jennifer Smith

Head of Operations

Company Inc.

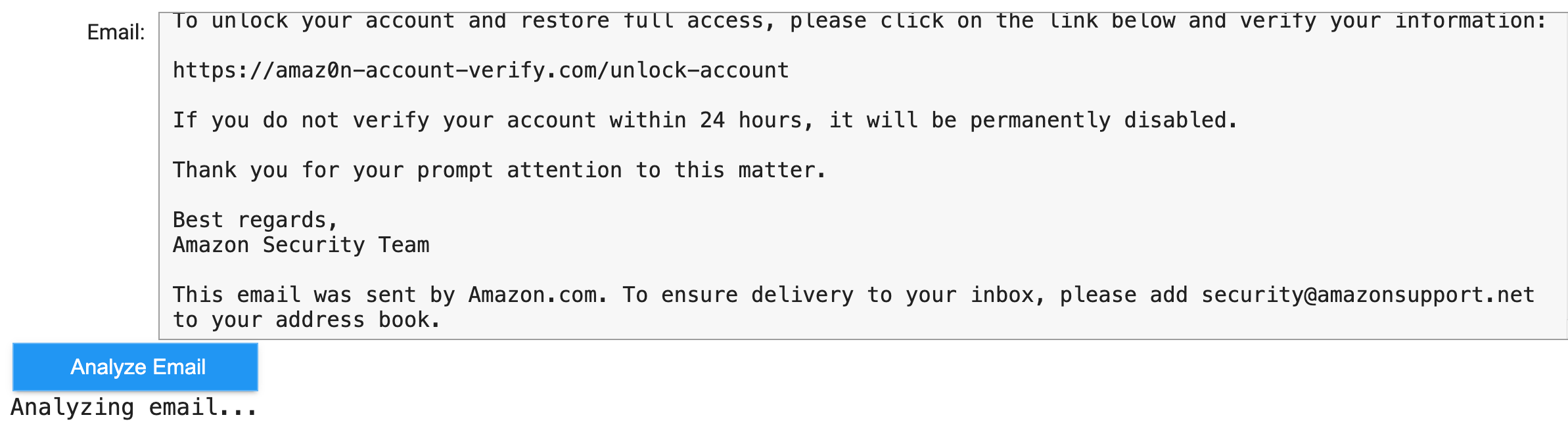

Phishing mail

From: [email protected]

To: [email protected]

Subject: Urgent: Your Amazon Account Has Been Locked

Dear Valued Customer,

We have detected unusual activity on your Amazon account. To prevent unauthorized access, we have temporarily locked your account for your protection.

To unlock your account and restore full access, please click on the link below and verify your information:

https://amaz0n-account-verify.com/unlock-account

If you do not verify your account within 24 hours, it will be permanently disabled.

Thank you for your prompt attention to this matter.

Best regards,

Amazon Security Team

This email was sent by Amazon.com. To ensure delivery to your inbox, please add [email protected] to your address book.

6. Building a user-friendly interface

Creating an intuitive interface is crucial for making our powerful phishing analyzer accessible to a wider audience. With just a few clicks, anyone can leverage the sophisticated detection capabilities of LLMs without needing to understand the underlying complexity.

To make our tool more accessible, we can create a simple GUI using IPython widgets.
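A minimal sketch of such a GUI, assuming the `analyze_email` function defined earlier: a `Textarea` for the raw email, a `Button` that runs the analysis, and an `Output` area for the result. The `build_analyzer_ui` name and the layout sizes are illustrative choices, not the notebook’s original code.

```python
import ipywidgets as widgets


def build_analyzer_ui(analyze) -> widgets.VBox:
    """Build a small form: paste an email, click the button, read the analysis."""
    email_input = widgets.Textarea(
        placeholder="Paste the full email (headers and body) here...",
        layout=widgets.Layout(width="100%", height="200px"),
    )
    analyze_button = widgets.Button(description="Analyze email", button_style="primary")
    output = widgets.Output()

    def on_click(_button):
        output.clear_output()
        with output:
            text = email_input.value.strip()
            if not text:
                print("Please paste an email first.")
                return
            try:
                result = analyze(text)
            except Exception as exc:  # e.g. the model's reply failed schema validation
                print(f"Analysis failed: {exc}")
                return
            # The result is a Pydantic model, so it can be shown as indented JSON
            print(result.model_dump_json(indent=2))

    analyze_button.on_click(on_click)
    return widgets.VBox([email_input, analyze_button, output])


# In the notebook:
# from IPython.display import display
# display(build_analyzer_ui(analyze_email))
```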

The analysis result is then printed as soon as the LLM has processed the email.

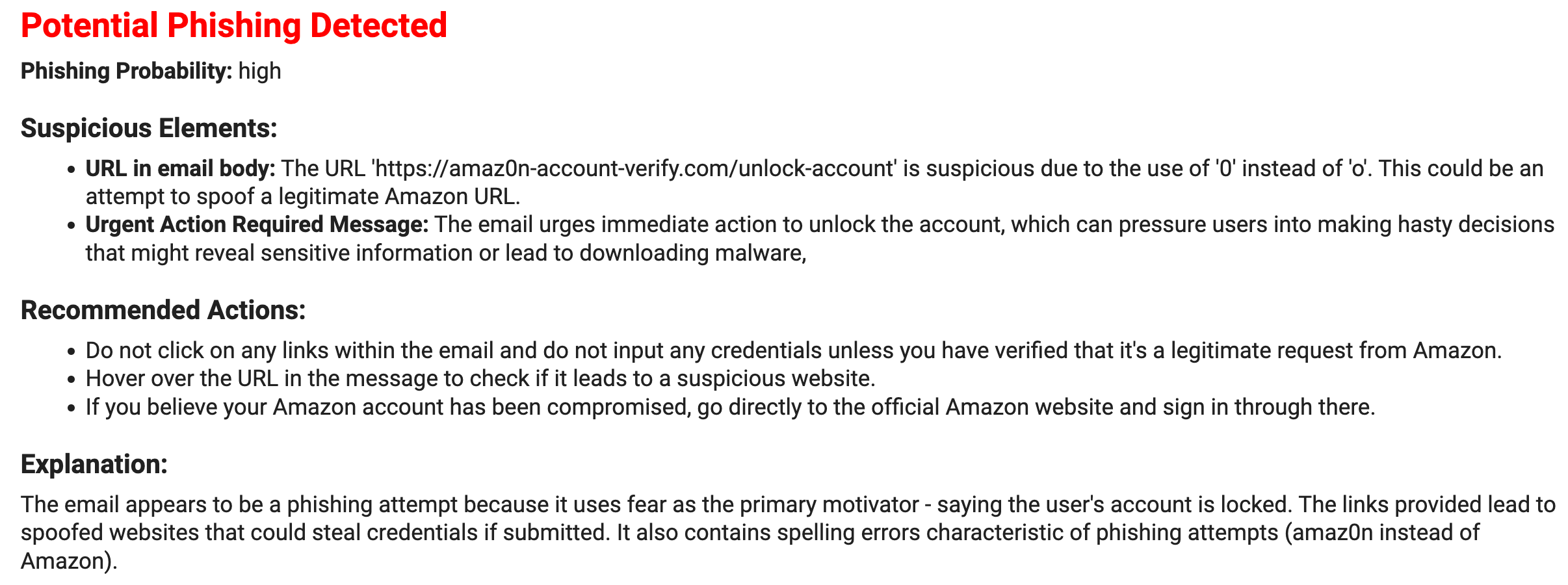

It correctly flagged the phishing email, and it also correctly classified the legitimate one.

This interface allows for easy email input and quick analysis results. For production environments, consider developing a dedicated API with FastAPI (fastapi.tiangolo.com) to route suspicious emails for analysis at scale.

7. Real-world testing

Testing our analyzer against actual phishing emails is essential to validate its effectiveness and uncover areas for improvement. Real-world datasets like phishing_pot show how the tool copes with the tactics attackers actually use in the wild. The process has four steps:

- Download and extract the dataset
- Process the emails
- Run our LLM-based analysis
- Compile and interpret the results

Dataset: GitHub - rf-peixoto/phishing_pot: A collection of phishing samples for researchers and detection…
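For the “Process the emails” step, each sample has to be turned into plain text before it reaches the model. The sketch below assumes the samples are raw `.eml` files and uses only the standard library: it keeps the From/To/Subject headers, prefers the text/plain body, and falls back to HTML with tags stripped. The original notebook installs beautifulsoup4 and chardet, presumably for similar HTML and encoding handling; `eml_to_prompt` and the 8,000-character cap are illustrative assumptions. Real-world samples can be malformed, so wrap calls in `try`/`except`.

```python
from email import policy
from email.parser import BytesParser
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect visible text from HTML, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())


def eml_to_prompt(raw: bytes, max_chars: int = 8000) -> str:
    """Turn raw .eml bytes into 'headers + body' text for the analyzer."""
    msg = BytesParser(policy=policy.default).parsebytes(raw)
    header_lines = [f"{name}: {msg[name]}" for name in ("From", "To", "Subject") if msg[name]]

    # Prefer the plain-text part; fall back to HTML with tags stripped
    body = ""
    part = msg.get_body(preferencelist=("plain", "html"))
    if part is not None:
        content = part.get_content()
        if part.get_content_subtype() == "html":
            extractor = _TextExtractor()
            extractor.feed(content)
            extractor.close()
            content = "\n".join(extractor.parts)
        body = content.strip()

    # Truncate to keep prompts within the model's context window
    return ("\n".join(header_lines) + "\n\n" + body)[:max_chars]
```

For example, `email_data = [eml_to_prompt(p.read_bytes()) for p in Path("phishing_pot/email").glob("*.eml")]` (with `Path` from `pathlib`), where the folder path is a placeholder for wherever you extracted the dataset.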

Here’s a snippet of our analysis process:

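```python
from tqdm import tqdm

results = []
# email_data holds the email texts extracted from the dataset in the previous step
for email_content in tqdm(email_data, desc="Analyzing emails"):
    try:
        analysis = analyze_email(email_content)
        results.append(analysis)
    except Exception as e:
        # Log and skip emails the model fails to analyze, then keep going
        print(f"Error analyzing email: {e}")

print(f"Analyzed {len(results)} emails")
```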

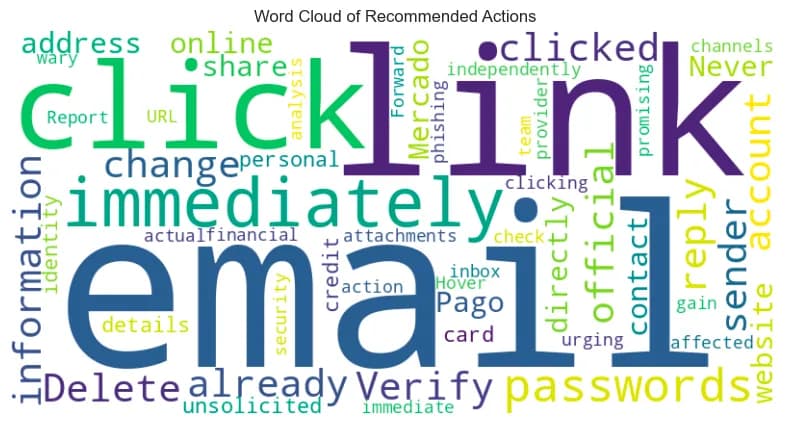

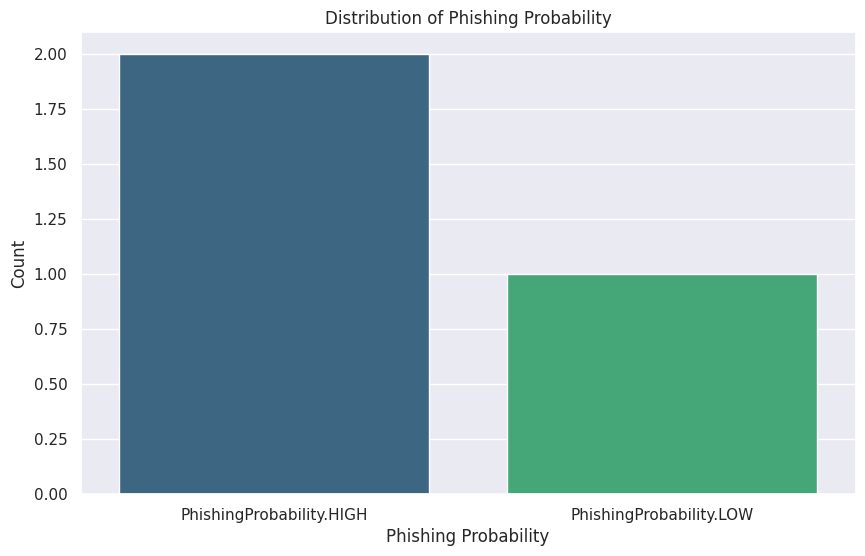

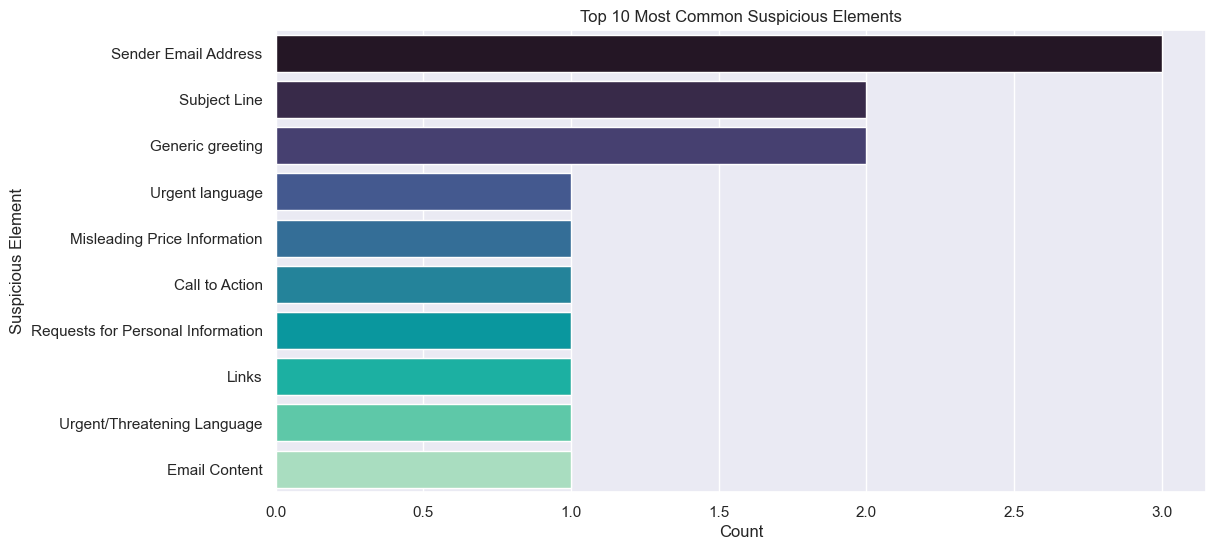

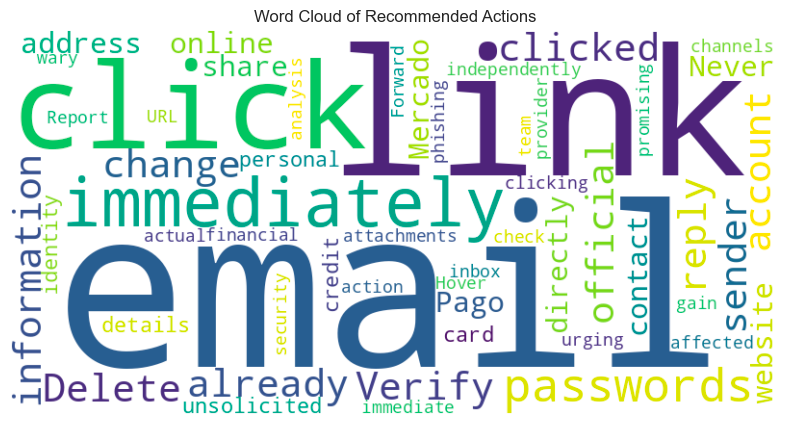

8. Analyzing the results

Once the dataset has been processed, we can analyze the results using Python libraries such as pandas, seaborn, and matplotlib to visualize performance and identify areas for improvement.
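As a starting point for that analysis, the sketch below loads the results into a pandas DataFrame and computes the share of emails flagged as phishing, the distribution of probability levels, and the average number of suspicious elements. Because phishing_pot is a collection of phishing samples, the flagged share approximates the detection rate (recall) on the emails that were analyzed successfully; it says nothing about false positives on legitimate mail. It assumes the records come from `[r.model_dump(mode="json") for r in results]`; the sample records here are made up, and `summarize_results` is a hypothetical helper.

```python
import pandas as pd


def summarize_results(records: list) -> dict:
    """Summarize analyzer output given SimplePhishingAnalysis.model_dump() dicts."""
    df = pd.DataFrame(records)
    return {
        "analyzed": len(df),
        # On a phishing-only dataset this fraction approximates recall
        "flagged_rate": float(df["is_potential_phishing"].mean()),
        "probability_counts": df["phishing_probability"].value_counts().to_dict(),
        "avg_suspicious_elements": float(df["suspicious_elements"].map(len).mean()),
    }


# Made-up records standing in for [r.model_dump(mode="json") for r in results]
sample = [
    {"is_potential_phishing": True, "phishing_probability": "high",
     "suspicious_elements": [{"element": "link", "reason": "lookalike domain"}]},
    {"is_potential_phishing": True, "phishing_probability": "medium", "suspicious_elements": []},
    {"is_potential_phishing": False, "phishing_probability": "low", "suspicious_elements": []},
    {"is_potential_phishing": True, "phishing_probability": "high",
     "suspicious_elements": [{"element": "sender", "reason": "spoofed"},
                             {"element": "tone", "reason": "urgency"}]},
]
print(summarize_results(sample))
```

From the same DataFrame, `df["phishing_probability"].value_counts().plot(kind="bar")` gives a quick matplotlib chart of the probability levels.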

Fine-tuning LLMs for phishing detection

While our current approach uses a general-purpose LLM, fine-tuning could bring significant improvements. I won’t detail this process here, as I’m less familiar with it, but here are some tips if you want to explore it.

Consider the following process:

- Collect a large dataset of labeled phishing and legitimate emails. The phishing_pot repo already provides a good set of phishing samples.
- Prepare and clean the data.
- Fine-tune the LLM of your choice using Unsloth AI.
- Evaluate the fine-tuned model against a held-out test set.

Fine-tuning can substantially improve detection accuracy, but it is costly. That’s why Unsloth AI uses optimization techniques that reduce memory consumption during fine-tuning while maintaining solid performance.
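For the “prepare and clean the data” step, many supervised fine-tuning tools accept chat-style examples stored as JSON Lines. The sketch below converts labeled emails into that shape, normalizing whitespace, truncating long bodies, and dropping exact duplicates. The `messages`/`role`/`content` layout, the label strings, the instruction text, and the 4,000-character cap are assumptions; check the format expected by the Unsloth notebook you start from. The instruction goes in the user turn rather than a system message because some chat templates (Gemma’s, for instance) do not accept a system role.

```python
import json

INSTRUCTION = "Classify the following email as 'phishing' or 'legitimate'.\n\n"


def to_chat_examples(labeled_emails, max_chars: int = 4000) -> list:
    """Convert (email_text, is_phishing) pairs into deduplicated chat-format examples."""
    seen = set()
    examples = []
    for text, is_phishing in labeled_emails:
        text = " ".join(text.split())[:max_chars]  # normalize whitespace, then truncate
        if not text or text in seen:
            continue  # skip empty emails and exact duplicates
        seen.add(text)
        examples.append({
            "messages": [
                {"role": "user", "content": INSTRUCTION + text},
                {"role": "assistant", "content": "phishing" if is_phishing else "legitimate"},
            ]
        })
    return examples


def write_jsonl(examples: list, path: str) -> None:
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for ex in examples:
            f.write(json.dumps(ex, ensure_ascii=False) + "\n")


data = [
    ("Your account is locked. Verify at https://amaz0n-account-verify.com", True),
    ("Reminder: Quarterly Review Meeting on Thursday at 2 PM.", False),
    ("Your account is locked.   Verify at https://amaz0n-account-verify.com", True),  # duplicate after normalization
]
examples = to_chat_examples(data)
print(len(examples))
```

Keep the held-out test set separate before deduplicating and writing the training file, so evaluation emails never leak into training.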

To dive deeper into fine-tuning LLMs, check out these helpful resources: GitHub - unslothai/unsloth: Finetune Llama 3.1, Mistral, Phi & Gemma LLMs 2-5x faster with 80% less…; Google Colab.

Conclusion

Our LLM-powered phishing analyzer shows how AI can be applied to concrete cybersecurity challenges. By leveraging the language understanding capabilities of LLMs, we’ve created a tool that quickly flags potential phishing threats.

As you experiment with this lab, consider integrating it with other security tools, such as:

- Email filtering systems
- Security awareness training programs
- Threat intelligence feeds

The future of cybersecurity lies in the intelligent application of AI technologies like LLMs. By staying curious and continually adapting our tools and strategies, we can build more resilient defenses.

Next steps (your homework!)

- Experiment with different LLMs and datasets to optimize the analyzer’s performance.
- Develop a custom scoring system for phishing probability.
- Integrate the analyzer with your existing email security infrastructure to enhance threat detection.

Stay curious. Stay secure.